5 Why Code?

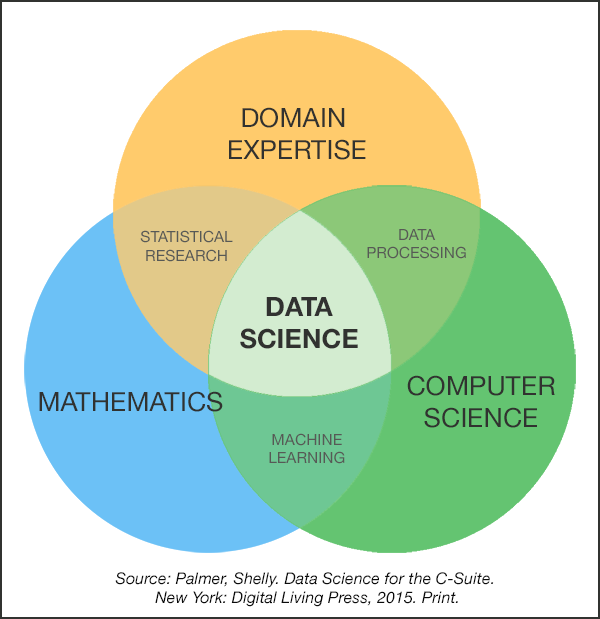

MDSI students have no doubt been presented with several versions of this Venn diagram, which purports to portray the three essential elements of data science:

Whilst this is obviously not a complete picture of data science, it does serve to reinforce the key message of the next few chapters which is that programming is a key skill for a Data Scientist; if you aren’t already comfortable working with code then you should be working towards developing programming skills before you graduate. Every Data Scientist job advertisement asks for programming skills, and in most cases they’re looking for a combination of R, Python and SQL. They might also ask for Hive, Spark or something else, but if you know your way around SQL and either R or Python you’re most of the way there.

Practically all Data Scientists use programming languages for at least some of their work, and most would do so every day.

It is worth noting that the requirement for programming skills does not mean that everything you do needs to be achieved using code - you should always use the best tool for the job and there will be plenty of times where that tool might be a spreadsheet, Tableau, or even just pen and paper. However, there will certainly be many times where a programming language is the best tool for the job, and the more comfortable you are with programming the easier your life will be.

This chapter will therefore aim to convince you that using programming languages will normally make your life easier, make your analysis more powerful, make your findings more reproducible, and make you a better Data Scientist.

Further Reading:

Data Science is Different Now by Vicki Boykis.

Key Points:

[Junior Data Scientists] come in with an unrealistic set of expectations about what data science work will look like. Everyone thinks they’re going to be doing machine learning, deep learning, and Bayesian simulations.

This is not their fault; this is what data science curriculums and the tech media emphasize. Not much has changed since I first glanced, starry-eyed, at Hacker News logistic regression posts many, many moons ago.

The reality is that “data science” has never been as much about machine learning as it has about cleaning, shaping data, and moving it from place to place.

Along with data cleaning, what’s become more clear as the hype cycle continues its way to productivity is that data tooling and being able to put models into production has become even more important than being able to build ML algorithms from scratch on a single machine, particularly with the explosion of the availability of cloud resources.

What is becoming clear is that, in the late stage of the hype cycle, data science is asymptotically moving closer to engineering, and the skills that data scientists need moving forward are less visualization and statistics-based, and more in line with traditional computer science curricula.

- Learn SQL.

- Learn a programming language extremely well and learn programming concepts.

- Learn how to work in the cloud.

5.1 Hypothetical Example

Consider a task where you’re given a CSV file and asked to answer some questions for a business stakeholder. The questions are fairly simple, and you can realistically use any tool you like. Let’s consider three courses of action:

A. Spreadsheet

You open the CSV using Excel, then save the file to convert it to an Excel file so you can use multiple tabs. You notice that Excel has messed up the dates during the import, so you spend a few minutes tidying them up manually. You then create a few new tabs with visualisations, spot a few outliers, and confirm that these outliers are due to errors in the data. You delete the rows. You then need to use filters to calculate some summary values, so you use the Auto-Filter function in Excel and record the results in a new tab.

All good, right?

- What happens if your stakeholder comes back in a month and asks you to show them how you calculated the answers? Will you remember all of the steps?

- What happens if they loved your work and want you to repeat your analysis with new data from this month? How much re-work is involved?

- What happens if someone “made a few corrections” and sends it back to you? How will you know what they changed?

Spreadsheets are a nightmare for quality control and reproducibility, and you should always think twice before using one. Spreadsheets will always be a handy way to manipulate tabular datasets, and you’ll probably find them useful for data collection and quick back-of-the-envelope calculations, but they’re often more trouble than they’re worth.

This is not to say that you should never use spreadsheets, just that you should pause and reconsider whether you’re setting yourself up for a problem in future.

B. Tableau

You import the CSV using Tableau, and build some dashboards to help answer the business questions. Some of the questions required logic that wasn’t immediately available in Tableau, so you used Calculated Fields to get what you need.

Tableau does some things really well:

- It doesn’t let you manually modify data

- It keeps a record of every transform you perform

- It lets you view the raw data that contributed to the plot you’re looking at (this is a very cool feature)

- It lets you re-import new data so you can save time if you’re doing the same task over and over again

but it still has it’s problems:

- The most complex transforms are trapped in Calculated Fields which have their own language and are hard to trace and debug

- The logic for how a particular view was constructed is non-linear - you could have dependencies in the data source, in other tabs, in other dashboards, etc.

- It makes it easy to do silly things (for example it would be silly to make business decisions based on the time-series forecasting module in Tableau)

- It is hard to customise the look and feel (and the people that are good at doing this are very expensive to hire!)

Tableau solves a lot of the problems encountered when using spreadsheets, and it’s definitely a powerful tool for solving many problems. It’s not however a one-stop-shop for data science, and most data science projects require a lot more power than you can get from Tableau.

C. R (or Python)

You import the CSV using readr which will automatically guess the type of each of the columns coming in, but allows you to specify override settings (in code!) if it’s guessing wrong. You can specify exactly how you want it to parse the date field, and you can use dplyr to perform all of the filters and remove the problematic rows using easy-to-read filters. You can use ggplot to create the visualisations, which gives you complete control over the appearance and design of the plot.

Most importantly:

- All of the steps you performed are now documented in code, which means you will always be able to explain how you came to the answer.

- To re-run your analysis on new data, you only need to change one line of code (the line where you selected the file to read in)

- If someone makes changes to your code, you can use any number of tools to

understand exactly what they changed. On your local machine you can use

diffbut we’ll cover more advanced (and easier to use) tools later in this course.

The key point I’m trying to make here is that there are many situations you will encounter as a Data Scientist where using a programming language is not the only way of completing a task, but you should always consider whether you can use a programming language because of the clear benefits to traceability, reproducibility and reusability.

As you’ll discover throughout this chapter and beyond, you can use code to do all of the following tasks:

- Data extraction and querying

- Data import and cleaning

- Data analysis

- Modelling and machine learning

- Visualisation

- Interactivity and animation

- Interaction with other applications, including production processes

- Describe and construct a reproducible data science environment

- Tell a story with data

- Collaborate with your team

- … pretty much anything you want to do can be done with code.

We’ll cover how to go about doing many of these throughout this chapter.

Case Study

At various points in the past five or so years you may have heard that mandatory helmet laws in Australia (which apply to cyclists) have not resulted in any lives being saved. This “fact” has been widely cited in research and policy documents, and has been referred to extensively throughout public discourse on the compulsory use of helmets. Unfortunately, the author of the paper in which this counter-intuitive finding was published revealed that the entire analysis was completed using Excel, and a subsequent review by another researcher found that:

- the data was entered into the spreadsheet with errors

- there was an error with the way the “trim and fill” adjustment procedure was performed

The first error turned out to be immaterial, however the second error caused the conclusion of the paper to be entirely erroneous - the author asserted that mandatory helmet laws had no impact on safety of cyclists, yet a new analysis of the data (using R instead of Excel) suggests that they have indeed made a significant, positive impact.

The researcher who discovered the errors wrote a very detailed account of the issues and how he went about trying to reverse-engineer someone else’s Excel file, and you can read that report here.

It is worth noting that even with the paper having been corrected in the journal where it was published, the impacts of the error are still present in public discourse around mandatory helmet laws in 2019. Using Excel might seem like a harmless decision at first, but the ramifications of a mistake made in an Excel spreadsheet can persist for over half a decade.

This is far from an isolated example - the Sydney Morning Herald ran a story The Excel Depression about how a flawed Excel analysis encouraged countries to pursue austerity policies based on a finding that was never able to be reproduced.

Spreadsheets. Just say no!5.2 What You Will Learn

In this section of the course we’re going to learn about programming from a systems level, looking at the different languages you’ll encounter and the way those languages typically get used in data science.

We’ll start by learning about Git, which has become a de facto standard for source control for Data Scientists (and most programmers too). We’ll cover the basics of Git so that you and your team can practice using it throughout the rest of the course. The first assignment (1A) will get you working with Git as a team.

Then we’ll move on to learning about the three core languages for data science: SQL, R and Python. You’ll hear plenty of banter between fans of each language, but the reality is you’re only making things hard for yourself if you can’t work in a team using more than one of these languages. We’ll look at each language from a high level, looking at how each of them helps you perform different sorts of tasks, and where they can be deployed. We’ll also include a primer for each language - this course won’t make you an expert but by the time we finish you should be able to write basic code in all three languages, and understand more complex code written by others. We’ll specifically focus on languages and paradigms which support data science.

Along the way we’ll look at how we can use different features of each language to help maintain readable, reproducible data science projects. The second assignment (1B) will task you with refactoring a data science project using what you have learned to improve the readability, reproducibility and maintainability of a project.

Finally we’ll look at how to work with multiple languages in the same team, and even in the same project. We’ll look at popular ways of connecting between SQL and R/Python, and effective techniques for deploying these languages in production. The final assignment for this section (1C) will task you with combining all three languages to solve a data science problem as a team.