9 Programming Concepts

This chapter will cover common concepts and best practices that will apply to all of the languages we have covered so far.

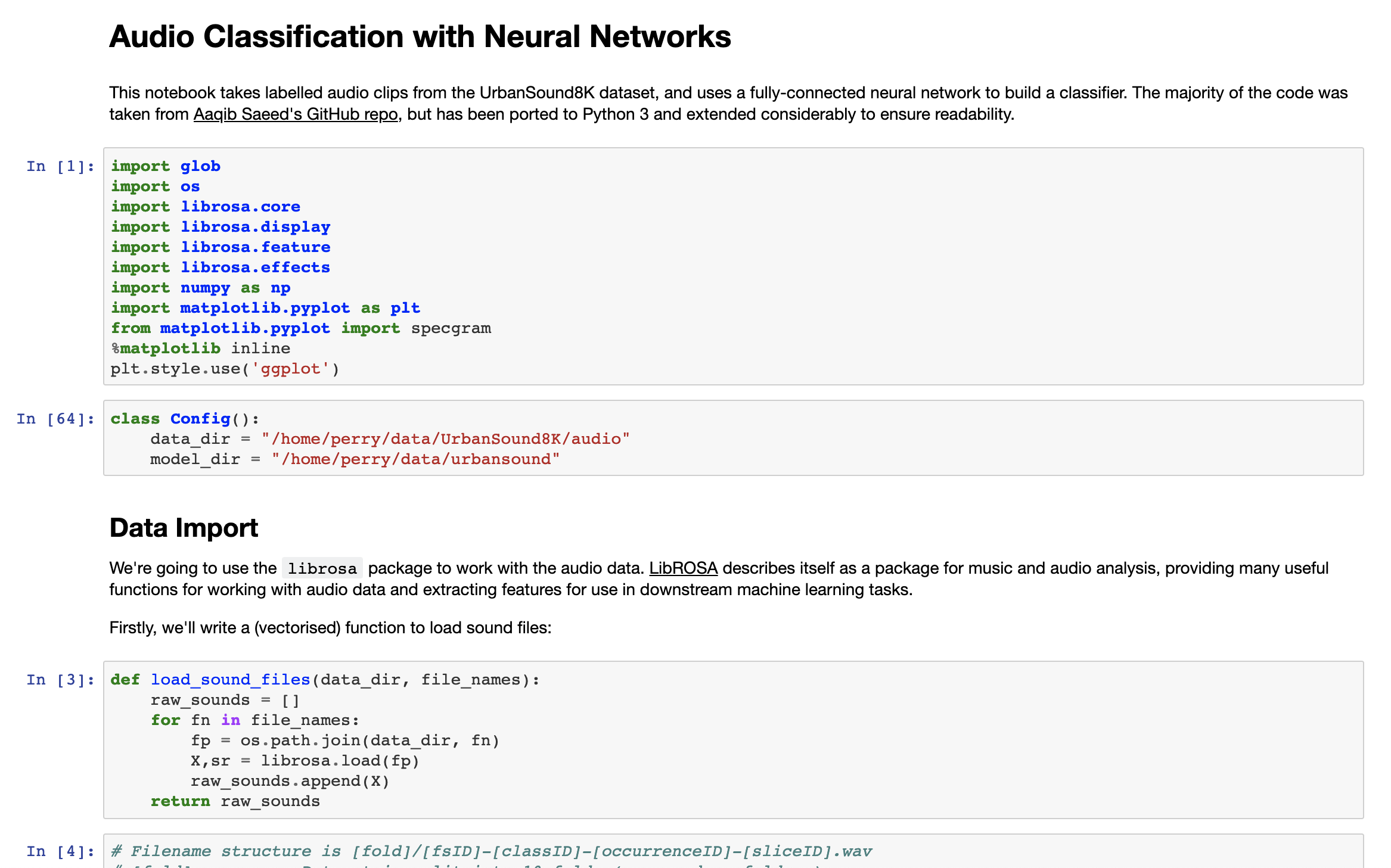

9.1 Notebooks

Whilst Notebooks have only really become popular in the past few years, the idea has been around for a while - if you have ever used Wolfram Mathematica or Maple then you have probably come across notebooks before. In the past few years they have become very popular for data science and you may have heard of one or more of the following Notebook offerings:

- Jupyter Notebooks

- RMarkdown Notebooks

- Apache Zeppelin

- Databricks

- Google Colab Notebooks

- Amazon SageMaker

Notebooks are an example of Literate Programming - a program is written as an explanation of the program logic in a natural language (in our case English), interspersed with snippets of code. You can think of this like a “lab notebook” where you record your procedures, data, calculations and findings. Importantly, when used appropriately they can make it much easier to reproduce results and findings because the reader has everything they need to understand what you did in your project.

9.1.1 Good points

When used carefully, notebooks can help improve your workflow (and team collaboration) in many ways:

- Mixing markdown and code encourages thorough documentation

- Inline plotting means that your plots appear in context, rather than popping up in a window somewhere else on your screen

- If you meticulously record everything you do in the notebook, it becomes an excellent way to support reproducible data science

- They’re normally web-based, which makes collaboration much easier than when writing scripts in an IDE

To maximise the benefits of notebooks you should be careful to make sure you:

- Record which version of each package you’re using as part of the notebook

- Use variables to set any configuration which is system specific at the top of your notebook.

  - For example, if you are loading files from a directory on your computer, put the directory path into a variable at the top of the notebook

  - When someone else wants to reproduce your results they just need to change the path to match the location of the files on their own computer, and the rest of the script should just work

- Make sure you always run your notebook cells in order, and restart the kernel regularly to make sure you aren’t relying on any “hidden state”

- Write about why you are doing things, as well as what you are doing.

- Provide expected intermediate results along the way, so that anyone running your code can check that they’re getting the same results as you at every step
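
The first two recommendations above can be sketched as a single setup cell at the top of a Python notebook. This is only an illustration: DATA_DIR and the PACKAGES list are hypothetical placeholders, and importlib.metadata assumes Python 3.8 or later.

```python
import sys
from importlib import metadata
from pathlib import Path

# System-specific configuration lives in one place at the top of the notebook.
# DATA_DIR is a hypothetical path; change it to match your own computer.
DATA_DIR = Path.home() / "data" / "my_project"

# Hypothetical list of the packages this notebook depends on.
PACKAGES = ["pandas", "numpy"]


def package_versions(names):
    """Return a dict mapping each package name to its installed version."""
    versions = {}
    for name in names:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = "not installed"
    return versions


# Record the environment as part of the notebook output.
print("Python", sys.version.split()[0])
for name, version in package_versions(PACKAGES).items():
    print(name, version)
```

Anyone re-running the notebook then only needs to edit DATA_DIR, and can compare the printed versions with their own.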

9.1.2 Bad points

For all of the benefits of notebooks (there are many!), there are also some drawbacks. The most famous (and entertaining) summary of the issues with notebooks was presented at JupyterCon 2018 by Joel Grus, and is worth watching.

To summarise Joel’s issues with Notebooks:

- Notebooks encourage you to run code out-of-order, which makes it hard to debug and can cause all sorts of problems with understanding

- Notebooks discourage good programming practices (modularity, testing, etc)

- It’s hard to look at help documents

- Most people don’t record versions of packages, which makes notebooks hard to run on anyone else’s computer

- Most people don’t identify all the assumptions they’ve made about their own system (file paths, etc), which makes notebooks hard to run on anyone else’s computer

- Notebooks encourage bad and buggy code, therefore actually hinder high quality and reproducible science

- Once code is in a notebook, it is hard to get it out again

- Notebooks make it easy to teach poorly

9.1.3 Should you use notebooks?

Yes.

Yihui Xie (creator of rmarkdown) made some excellent points in this blog post about why notebooks are worth using, despite the potential drawbacks identified by Joel Grus.

I think notebooks are more suitable for a world where the data analysis culture is stronger than the engineering culture. Joel insists that even if you are only experimenting or prototyping, you should follow good software engineering rules (slides #46-49). I tend to disagree, because prototyping is prototyping, and engineering is engineering. Good software engineering is important, but I don’t think it is necessary to write unit tests or factor out code at the prototyping stage. It is fine to do these things later, but again, I agree with Joel that if you are going to develop software seriously later, you’d better leave the notebook and use a real editor or IDE instead (e.g., to write reusable modules or packages).

How about doing data analysis in notebooks? Is “good software engineering” relevant? I’d argue that it is not highly relevant, and it is fine to use notebooks. Analyzing data and developing software are different in several aspects. The latter is meant to create generally useful and reusable products, but the former is often not generalizable—you only analyze a specific dataset, you want to draw conclusions from the specific data, and you may not be interested in or have the time to make your code reusable by other people (or it may not be possible).

I think Yihui has hit the nail on the head: notebooks are great for analysis and experimentation. If your project has a need for reproducibility (and it probably will - we are doing science after all) then there are some fairly easy steps you can take to make your notebook reproducible. Once you’ve done your analysis and experimentation, and you want to move to building something for production, then you’re better off moving away from notebooks and writing more traditional code using an IDE.

You will get exposure to both styles of programming through the assignments for this course.

9.2 Modules and Packages

Whilst most of your coding will be done in notebooks, you’re still going to be using other people’s code on a daily basis. It’s important to have an understanding of what “good” practice looks like for software engineers so that you can understand how to use other people’s code in your own projects.

You won’t be expected to learn how to write your own packages or modules as part of this course - links will be provided if you want to go down that path on your own.

The purpose of this section is to make you familiar with some of the patterns you will see when working with packages and modules written by professional programmers.

9.2.1 Python Modules

A “module” in Python can be as simple as a single Python script which provides a function. Let’s look at a simple example.
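
As a minimal sketch, assuming the file is called my_module.py (the name used later in this section), such a module might contain nothing more than the following. The function body is an assumption based on the description that follows, not a verbatim listing.

```python
# my_module.py (assumed file name)

def fib(n):
    """Print the Fibonacci numbers that are smaller than n on one line."""
    a, b = 0, 1
    while a < n:
        print(a, end=' ')
        a, b = b, a + b
    print()
```

Saved next to another script, the function becomes available there after import my_module, as my_module.fib().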

This module has a single function, fib(), which takes an integer argument n and prints out the Fibonacci series up to that number. If I want to run this code in another script (the scripts need to be in the same folder) then I can import the module with import my_module and call my_module.fib(25), which prints:

0 1 1 2 3 5 8 13 21

This shows how easy it is to break up your own code into modules - it’s really just a snippet of Python code in a file, and some easy syntax you can use to import it into another script. If you were writing your own code and wanted to break it up into smaller files (to improve readability), you could put each of your functions in modules and then import them into your main script when you need them.

If you look at other people’s modules, however, you’ll see a few weird things that I haven’t explained here. The most common is a block at the end of the module which starts with `if __name__ == "__main__":`. In this example the block contains two statements: `import sys` and `fib(int(sys.argv[1]))`.

This will take a bit of effort to explain, so before we jump in and say what it does: this is the code that will run if you call the script from the command line. For example, if I added this block to my_module.py and then ran python my_module.py 25 on my computer, the result would be the same as running fib(25) within a script. Looking at the code step by step:

- `if __name__ == "__main__":` is an if statement that will run the commands below it if `__name__` is set to `__main__`. `__name__` is a special Python variable which tells the module what it is currently named.

  - When you load a module from within another script, the Python interpreter will set `__name__` to the name of the module - in this case calling `import my_module` will set `__name__` to `my_module`. This will cause the if statement to evaluate to false, which means the code will not run when being imported from another script.

  - When you call the script directly from the command line the Python interpreter will set `__name__` to `__main__`, which means the if statement will evaluate to true, and the code underneath will run.

- `import sys` is importing the `sys` module which comes bundled with all Python distributions. We’ll need the `sys` module to get access to any arguments passed into the script on the command line (in this case we passed the argument `25`).

- `fib(int(sys.argv[1]))` is retrieving the first command line argument, then casting it to an integer, and then finally passing it into the `fib()` function defined earlier in the module. Note that the first argument is index 1 (instead of 0) because the name of the script (my_module.py) is placed at index 0.
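
The two cases described above can be checked with a small self-contained sketch. It writes an assumed version of my_module.py to a temporary folder, runs it once from the command line and imports it once. The temporary folder and the variable names are illustrative only.

```python
import importlib
import subprocess
import sys
import tempfile
import textwrap
from pathlib import Path

# Assumed contents of my_module.py, including the block discussed above.
MODULE_SOURCE = textwrap.dedent('''\
    def fib(n):
        a, b = 0, 1
        while a < n:
            print(a, end=" ")
            a, b = b, a + b
        print()

    if __name__ == "__main__":
        import sys
        fib(int(sys.argv[1]))
''')

with tempfile.TemporaryDirectory() as tmp_dir:
    module_path = Path(tmp_dir) / "my_module.py"
    module_path.write_text(MODULE_SOURCE)

    # Case 1: run the file directly, so __name__ is "__main__" and the
    # guarded block runs. Equivalent to typing: python my_module.py 25
    completed = subprocess.run(
        [sys.executable, str(module_path), "25"],
        capture_output=True, text=True, check=True,
    )
    command_line_output = completed.stdout.strip()

    # Case 2: import the file, so __name__ is "my_module" and the guarded
    # block is skipped (nothing is printed during the import).
    sys.path.insert(0, tmp_dir)
    try:
        my_module = importlib.import_module("my_module")
        imported_name = my_module.__name__
    finally:
        sys.path.remove(tmp_dir)

print(command_line_output)
print(imported_name)
```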

Summary

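That was all a bit wordy so we’ll summarise again here. When you see this at the bottom of a Python module:

`if __name__ == "__main__":`

it means that the code underneath will only run when the script is called directly from the command line, and not when the module is imported from another script.

9.2.1.1 Installing Python modules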

For modules written by others, you will almost always use pip or conda to install them on your own system.

Most Python modules you will write yourself don’t really need to be “installed”; as long as they are in your project folder then you can import them from any other script in your project.

If you want a module to be available everywhere on your system, you need to copy it to one of the locations in your “python path” list; you can find out which directories are included in your path by running import sys; print(sys.path) from Python. This isn’t something that you’ll do very often.

You can learn more about Python modules from the Python Docs. If you want to go further you can learn about how to Package a Python Project so that you can share it via the Python Package Index or within your team/organisation.

9.2.2 R Packages

Packages in R are a little bit more effort than modules in Python - but you’re also less likely to write them. Whilst you could replicate the Python workflow in R (write a script with many functions, and different behaviour depending on whether they’ve been imported or run directly from the command line) this would be unusual and confusing; it’s not a common workflow in R. This is largely because R was built with extension packages in mind from the initial release, so everyone just writes packages by default. For your own projects with many scripts the common practice is to simply use source() to load them from other files - this is similar to Python except that there is no `if __name__ == "__main__":` section at the end of the script and therefore no ability to call the functions from Rscript on the command line.

Thankfully writing packages in R is very easy these days, using the features in RStudio and the devtools package. This more formal packaging approach also means that you likely won’t have to work with other people’s packages directly, you’ll just install them using install.packages() and won’t have to think twice about it. Packages which are hosted on GitHub or Bitbucket can be installed using install_github() or install_bitbucket() from devtools.

If you do want to learn how to write your own R package, Hadley Wickham’s R Packages is the go-to resource.

9.2.3 Unit Tests

Unit testing involves breaking your program into pieces, and subjecting each piece to a series of tests. There are a few reasons why this is a very good idea:

- By formalising how you want those pieces to behave (and then testing them to make sure they comply), you are catching your own errors before they happen

- When you make changes in future (adding features or fixing bugs) you can use your tests to make sure that the changes haven’t broken any of your existing functionality

- By thinking about testing early in a project, you will tend to write better code. This is because you’ll be thinking about how to write simple, modular code because that’s going to make testing easier.

- By making it harder to write code, it encourages code re-use, and encourages you to use existing packages rather than rewriting your own code. All code is technical debt so minimising the amount of new code you write is actually a positive.

- When you’ve made sure that all of the important functions in your program have an associated test you can focus on making sure that the overall program logic is correct; you don’t need to worry about whether the functions are doing their job properly. You can move forward with the rest of your program with confidence.

The mechanics of unit testing usually look something like this:

- Write a function

- Write out a series of tests for the function, where you call the function with hard-coded inputs, and test that the function returns the expected outputs.

- Run the tests (manually) to make sure they all pass

- If any tests fail, check the test and your code to identify if the error is a testing error or an error in your function. Fix the error and repeat until everything passes.

Assuming that you have good test coverage (the tests are covering the full range of behaviour including intended operation, edge cases, error messages, etc), then you can be confident that your function is doing what you think it is doing, and move on with writing the rest of your program. The tests that you have already written are still executed every time you test in future, which means you’ll find out straight away if a future change breaks any of your existing code.

Worked Example

We can write a couple of unit tests for the fib() function above. I’ll also rewrite the function so that instead of printing the Fibonacci sequence, it returns the sequence so that we can test it properly.

    def fib(n):
        a, b = 0, 1
        output = []
        while a < n:
            output.append(a)
            a, b = b, a+b
        return output

    def test_fib_non_fib_input():
        assert fib(25) == [0, 1, 1, 2, 3, 5, 8, 13, 21]

    def test_fib_fib_input():
        assert fib(21) == [0, 1, 1, 2, 3, 5, 8, 13, 21]

This code includes the revised Fibonacci function, as well as two unit tests. The first unit test is a function which tests that fib(25) returns the Fibonacci sequence up to 21, and the second is a function which tests that fib(21) also returns the Fibonacci sequence up to 21. When I run these unit tests via pytest I get the following results:

    $ pytest
    =============================== test session starts ===============================
    platform darwin -- Python 3.7.1, pytest-4.0.2, py-1.7.0, pluggy-0.8.0
    rootdir: /Users/perrystephenson/code/modules, inifile:
    plugins: remotedata-0.3.1, openfiles-0.3.1, doctestplus-0.2.0, arraydiff-0.3
    collected 2 items

    test_module.py .F                                                           [100%]

    ==================================== FAILURES =====================================
    _______________________________ test_fib_fib_input ________________________________

        def test_fib_fib_input():
    >       assert fib(21) == [0, 1, 1, 2, 3, 5, 8, 13, 21]
    E       assert [0, 1, 1, 2, 3, 5, ...] == [0, 1, 1, 2, 3, 5, ...]
    E         Right contains more items, first extra item: 21
    E         Use -v to get the full diff

    test_module.py:13: AssertionError
    ======================= 1 failed, 1 passed in 0.12 seconds ========================

Oh no! My second test failed! If we go back and look at the function we can see that I used while a < n:, when I should have used <=. I’ll go ahead and make the change and re-run the tests:

    $ pytest
    =============================== test session starts ===============================
    platform darwin -- Python 3.7.1, pytest-4.0.2, py-1.7.0, pluggy-0.8.0
    rootdir: /Users/perrystephenson/code/modules, inifile:
    plugins: remotedata-0.3.1, openfiles-0.3.1, doctestplus-0.2.0, arraydiff-0.3
    collected 2 items

    test_module.py ..                                                           [100%]

    ============================ 2 passed in 0.04 seconds =============================

Unit testing in R is closely tied to package development - when you write a package using devtools it is fairly straightforward to write unit tests using testthat. The tests are easy to write, with a huge library of test types, and the package prints out an encouraging message every time you run your tests, just to keep you motivated!

Unit testing in Python is a bit of a mess - there are many packages that offer unit testing for Python modules and everyone seems to have a different opinion on which one is best. The pytest package is one of the more popular choices with ease-of-use and strong IDE support, and support for many different kinds of tests.
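
For reference, here is a sketch of the worked example after the fix described above, with <= in place of <. The test names follow the earlier listing; the docstring is an addition for this sketch.

```python
def fib(n):
    """Return the Fibonacci numbers less than or equal to n."""
    a, b = 0, 1
    output = []
    while a <= n:  # the fix: <= so that n itself is included
        output.append(a)
        a, b = b, a + b
    return output


def test_fib_non_fib_input():
    # 25 is not a Fibonacci number, so the sequence stops at 21.
    assert fib(25) == [0, 1, 1, 2, 3, 5, 8, 13, 21]


def test_fib_fib_input():
    # 21 is a Fibonacci number and must be included in the result.
    assert fib(21) == [0, 1, 1, 2, 3, 5, 8, 13, 21]
```

Saved as test_module.py, both tests would be collected automatically by pytest because their names start with test_.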

9.2.4 Documentation

One of the more important features of a module or a package is documentation. Whilst we’re not going to go into the details of how to build package documentation, we will briefly cover the standard documentation syntax for R and Python functions so that you recognise it when you see it, but also so that you can get into the habit of writing your own function documentation in a way that everyone else will recognise.

9.2.4.1 R Functions

Most R package documentation is automatically generated using the roxygen2 package. This package lets authors write their function documentation as comments with special tags, and then automatically builds the “official” R documentation. Functions which are documented using roxygen2 format look something like this:

    #' The length of a string (in characters).
    #'
    #' @param string input character vector
    #' @return numeric vector giving number of characters in each element of the
    #'   character vector. Missing strings have missing length.
    #'
    #' @examples
    #' str_length(letters)
    #' str_length(c("i", "like", "programming", NA))
    #'
    #' @export
    str_length <- function(string) {
      string <- check_string(string)
      nc <- nchar(string, allowNA = TRUE)
      is.na(nc) <- is.na(string)
      nc
    }

The various tags in this example record the one expected parameter string, the object that is returned from the function (a numeric vector), some examples that the user can run to understand how to use the function, and a special keyword @export which tells R that it should make this function available to users of the package (rather than being a hidden internal function that users aren’t supposed to see).

The main benefit of these roxygen2 documentation tags is that they’re stored right above the function definition which makes it really easy to check and update the documentation as you write, rather than having to update code in multiple places.

You can learn more about R package documentation here.

9.2.4.2 Python Functions

Python is a lot less strict when it comes to documentation - you can basically write anything you like as long as you write something. Python functions use a docstring for documentation, which is just a section of free text at the top of a function definition.

An example of a Python function with a docstring, from the Python documentation:

    def complex(real=0.0, imag=0.0):
        """Form a complex number.

        Keyword arguments:
        real -- the real part (default 0.0)
        imag -- the imaginary part (default 0.0)
        """
        if imag == 0.0 and real == 0.0:
            return complex_zero
        # ... and so on ...

The docstring starts and finishes with the triple double quotation marks """ and can span multiple lines. Convention suggests you should include the argument names, types and descriptions, as well as the output that the function will return. You can learn more about Python docstrings here.
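
As an illustration of that convention, the sketch below documents the fib() function from the unit testing example. The layout of the docstring is just one reasonable choice, not a required format.

```python
def fib(n):
    """Return the Fibonacci numbers up to and including n.

    Arguments:
    n -- int, the largest value that may appear in the result

    Returns:
    list of int -- the sequence in increasing order, starting from 0
    """
    a, b = 0, 1
    output = []
    while a <= n:
        output.append(a)
        a, b = b, a + b
    return output


# The docstring is stored on the function and is what help(fib) displays.
print(fib.__doc__)
```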

9.3 Programming Paradigms

It can be helpful for newcomers to R and Python to understand the two major programming paradigms that they support: Functional Programming and Object Oriented Programming. Understanding these two styles of programming will help new users to understand the why behind many of the strange syntax and style choices in popular packages as well as when reading other people’s code.

In general both R and Python support each of these styles of programming, however R is more closely aligned to Functional Programming and Python is more closely aligned to Object Oriented Programming. That is not to say that you cannot write functional code in Python or object oriented code in R, just that it is fairly rare to find a Python package that is purely functional or an R package which uses objects.

9.3.1 Functional Programming

The two key ideas behind Functional Programming, at least as far as we are concerned, are that objects are immutable and functions are pure. We’ll look at what both of these terms mean, but the main benefit of Functional Programming is that by assuming that objects can never change, and assuming that functions will always do the same thing, it becomes very easy to understand what your code is doing.

The main reason why you need to understand Functional Programming principles is because R is a language with very strong support for Functional Programming, and because the most popular data manipulation packages (the tidyverse packages, including dplyr and purrr) are written specifically to be used in a functional way.

9.3.1.1 Objects are immutable

Immutable is an unnecessarily complex word which just means “cannot be modified”, but it is a common word in programming so it makes sense to introduce it here.

The opposite of immutable is mutable, which means that an object can be modified. In functional programming, you cannot modify objects; you may only create modified copies of them. This means that if you are writing functional code, you should not change an existing object x in place. Instead, you pass x into a function such as mutate(), which does something and creates a modified copy of x, and you save that copy under a new name such as x_new. This follows the first rule of functional programming, which is that objects are immutable.
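
The same idea can be sketched in Python. The add_one() function here is a hypothetical stand-in for mutate(), and plain lists are used to keep the example small.

```python
def add_one(values):
    """Return a new list with 1 added to each element; the input is not changed."""
    return [value + 1 for value in values]


x = [1, 2, 3]

# Functional style: x is left alone and the modified copy gets a new name.
x_new = add_one(x)

print(x)      # [1, 2, 3]
print(x_new)  # [2, 3, 4]

# Non-functional style, shown for contrast: the object is modified in place.
y = [1, 2, 3]
y.append(4)
print(y)      # [1, 2, 3, 4]
```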

You will most likely come across this in R when using dplyr pipes, where each function takes a dataframe and returns a modified copy of that dataframe.

    modified_copy <- input_data %>%
      mutate(new_column = some_value_1 + some_value_2) %>%
      select(suburb, city, new_column)

The other place where you will see Functional Programming used a lot in R is when working with the apply functions in base R, or the purrr package from the tidyverse. In each case, these tools give you seriously powerful techniques that help make your code easier to read and write, as well as making the most of parallel computation, by leveraging the power of pure functions. We won’t cover purrr in this course, although you will find a link to a DataCamp course in Learning More about Functional Programming.

9.3.1.2 Functions are pure

A pure function is a function which has no side effects, which is a formal way of saying that pure functions:

- cannot access any data which has not been passed in as an argument

- cannot modify any memory, except by returning an object

This means that pure functions cannot do any of the following:

- access data from the global environment

- modify or write data into the global environment

- print anything to the screen (they can’t do anything at all with computer inputs or outputs)

This is why when using the tidyverse packages you will find that there are pure functions and impure functions. dplyr and purrr are generally made up of pure functions, whilst readr and ggplot2 functions are impure because they have side-effects (reading from and writing to files, and plotting to screen).

Functional Languages cannot eliminate Side Effects, they can only confine them. Since programs have to interface to the real world, some parts of every program must be impure. The goal is to minimize the amount of impure code and segregate it from the rest of our program.

— Charles Scalfani

In general you should always try to write pure functions because they are so much easier to debug and test, however it also pays to use common sense when following the rules. For example, some of the functions in dplyr will print helpful messages to the console, which is technically breaking the rules of a pure function but is very useful when writing and debugging your code.

9.3.1.3 Learning more about Functional Programming

You can learn more about functional programming by completing the DataCamp course Intermediate Functional Programming with Purrr. You might also like to read the So You Want To Be A Functional Programmer blog series by Charles Scalfani.

9.3.2 Object Oriented Programming

Object Oriented Programming (OOP) is far more popular than Functional Programming, with some of the world’s most popular languages like C++ and Java providing first class support for OOP. You will most frequently encounter OOP concepts when working in Python, although R actually has a few different OOP systems (S3, S4 and RC, which are explained well here).

The main reason why you need to understand OOP principles is because Python is a language with very strong support for OOP, and because the most popular data manipulation package (pandas) and the most popular machine learning package (scikit-learn) are written using the OOP paradigm.

The two key ideas behind OOP, at least as far as we are concerned, are that objects are custom data structures and methods are functions which apply to objects.

We will work through this with a code example using Python.

The first thing we need to do is create a blueprint for an object; you will see why we use these blueprints as we go through the example. In Python and most other OOP languages, we use the term class to refer to a blueprint for an object.

Let’s define a blueprint for a “training example” object which contains everything we need to know about a labelled training datapoint, which will be used to train a model. This training example object will need to have a few attributes - some of them will be common to all training examples and others will be specific to each datapoint:

- the name of the dataset (common to all points)

- a few features (unique to each point)

- a label (unique to each point)

Let’s go ahead and create the blueprint by creating a new class in Python. To start with, we will just include the attributes which are common to all training examples.
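
A minimal sketch of such a class, using the class name and attribute that appear later in this section:

```python
class TrainingExample:
    # A class attribute: shared by every object created from this blueprint.
    dataset_name = 'mtcars'
```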

That was easy!

We can now go ahead and create a couple of objects using this class to see how it works.
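
As a sketch, with the blueprint repeated so that the snippet runs on its own, creating two objects and reading the shared attribute from each might look like this. The variable names are assumptions borrowed from the later examples.

```python
class TrainingExample:
    dataset_name = 'mtcars'


# Calling the class like a function creates a new object from the blueprint.
training_example_1 = TrainingExample()
training_example_2 = TrainingExample()

# Both objects can see the class attribute.
print(training_example_1.dataset_name)
print(training_example_2.dataset_name)
```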

Note that this looks just like calling a function - you need to include the () after the name of the class to tell Python that you want a new object - you’ll see why this is the case shortly. We can now take a look at these two objects and confirm that we can indeed retrieve the attribute that we set - the name of the dataset.

    mtcars
    mtcars

We’ve successfully created a blueprint (class), then used it to create two objects using that blueprint, and finally accessed the attributes of those two objects.

Of course we still need to set some more attributes for these training examples - we need to add the features and the label which will be different for each object we create. Because it’s going to be different for each object, we’re going to need to tell Python what we want these values to be every time we create a new object.

Let’s work backwards to see how this will work. We want to be able to run this code to create new objects:

    training_example_1 = TrainingExample(feature1=0.5, feature2=True, label=0)
    training_example_2 = TrainingExample(feature1=0.7, feature2=True, label=1)
    training_example_3 = TrainingExample(feature1=-1.5, feature2=False, label=1)

In order for this to work, we need to tell Python what to do with these arguments - we need to provide a constructor method which initializes the attributes for each object we create. Python has a special name for this method: `__init__()`. Inside this method we tell Python what to do every time we create a new object from the blueprint. In this case, we want it to assign some values to attributes inside the object.

    class TrainingExample:
        dataset_name = 'mtcars'

        def __init__(self, feature1, feature2, label):
            self.feature1 = feature1
            self.feature2 = feature2
            self.label = label

In the example above we still have the class attribute “dataset_name”, but now we have also added a constructor method which takes four arguments: self, feature1, feature2, and label. When we write TrainingExample(feature1=0.5, feature2=True, label=0), Python will create a new object, then call `__init__()` with the object itself as the first argument and then the three arguments we provided. The constructor method will then set three attributes on the object (we use self.attribute = to set attributes on an object).

We can now test out the whole script to see that it is working:

    class TrainingExample:
        dataset_name = 'mtcars'

        def __init__(self, feature1, feature2, label):
            self.feature1 = feature1
            self.feature2 = feature2
            self.label = label

    training_example_1 = TrainingExample(feature1=0.5, feature2=True, label=0)
    training_example_2 = TrainingExample(feature1=0.7, feature2=True, label=1)
    training_example_3 = TrainingExample(feature1=-1.5, feature2=False, label=1)

    print('training_example_1 feature1: ' + str(training_example_1.feature1))
    print('training_example_2 feature1: ' + str(training_example_2.feature1))
    print('training_example_3 feature1: ' + str(training_example_3.feature1))

The output looks like this:

    training_example_1 feature1: 0.5
    training_example_2 feature1: 0.7
    training_example_3 feature1: -1.5

You can see that the constructor function has worked as expected, and each of the three instances of the object has its own attributes.

Finally, we need to implement some methods. Methods are just functions which apply to an object. We will write two methods for this class:

- a method to mark the example as being “train” or “test”

- a print method

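Firstly, let’s write the train/test method for the class. The method, set_train_test(), takes the assignment as an argument and stores it in a train_test attribute on the object.

Now when we want to assign a training example to the train or test set, we can just call the .set_train_test() method, for example training_example_1.set_train_test('train').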
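
A sketch of the method and a call to it, consistent with the final script shown further down. The class is repeated here so that the snippet runs on its own.

```python
class TrainingExample:
    dataset_name = 'mtcars'

    def __init__(self, feature1, feature2, label):
        self.feature1 = feature1
        self.feature2 = feature2
        self.label = label

    def set_train_test(self, train_test):
        # Store the assignment ('train' or 'test') on this particular object.
        self.train_test = train_test


training_example_1 = TrainingExample(feature1=0.5, feature2=True, label=0)
training_example_1.set_train_test('train')
print(training_example_1.train_test)
```

Note that in this intermediate version the train_test attribute does not exist until the method has been called.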

We’ll also set a default value of “unassigned” in the constructor method so that it always has a value, even if we haven’t called the method:

    def __init__(self, feature1, feature2, label):
        self.feature1 = feature1
        self.feature2 = feature2
        self.label = label
        self.train_test = "unassigned"

And finally let’s write the print method, which will make it easier to view the object:

    def print(self):
        print('Feature 1: ' + str(self.feature1) +
              ' Feature 2: ' + str(self.feature2) +
              ' Label : ' + str(self.label) +
              ' Train/Test: ' + self.train_test)

Putting it all together, this is our final script:

    class TrainingExample:
        dataset_name = 'mtcars'

        def __init__(self, feature1, feature2, label):
            self.feature1 = feature1
            self.feature2 = feature2
            self.label = label
            self.train_test = "unassigned"

        def set_train_test(self, train_test):
            self.train_test = train_test

        def print(self):
            print('Feature 1: ' + str(self.feature1) +
                  ' Feature 2: ' + str(self.feature2) +
                  ' Label : ' + str(self.label) +
                  ' Train/Test: ' + self.train_test)

    training_example_1 = TrainingExample(feature1=0.5, feature2=True, label=0)
    training_example_2 = TrainingExample(feature1=0.7, feature2=True, label=1)
    training_example_3 = TrainingExample(feature1=-1.5, feature2=False, label=1)

    training_example_1.set_train_test('train')
    training_example_2.set_train_test('test')

    training_example_1.print()
    training_example_2.print()
    training_example_3.print()

And the output looks like this:

    Feature 1: 0.5 Feature 2: True Label : 0 Train/Test: train
    Feature 1: 0.7 Feature 2: True Label : 1 Train/Test: test
    Feature 1: -1.5 Feature 2: False Label : 1 Train/Test: unassigned

You have now learned almost everything you need to know about objects! You probably won’t write them too often when first learning Python, but you’ll use attributes and methods all the time so it’s great to know what’s happening.

Some examples of where you are using objects include:

- creating new dataframes in pandas with pd.DataFrame() - DataFrame is a class, and when you create a new object using pd.DataFrame() Python is calling the __init__() method for you

- appending new data to lists with my_list.append() - you are calling the .append() method on a list object

- getting the dimensions of a pandas DataFrame with df.shape - you are accessing the .shape attribute of a DataFrame object
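The same pattern shows up with Python's built-in types. The following is a minimal sketch using only built-ins (the variable names are illustrative and not part of the original example): calling a class creates an object, attributes are read without parentheses, and methods are called with them.

```python
# Calling a class creates a new object of that class.
z = complex(3, 4)

# Attributes are accessed without parentheses.
print(z.real, z.imag)   # prints: 3.0 4.0

# Methods are called with parentheses.
my_list = [1, 2]
my_list.append(3)
print(my_list)          # prints: [1, 2, 3]

# Every value is an object of some class.
print(type(my_list))    # prints: <class 'list'>
```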

9.3.2.1 Learning more about Object Oriented Programming

You can learn more about object oriented programming by completing the DataCamp course Object-Oriented Programming in Python.

9.4 Defensive Programming

Programming is a little like driving, in the sense that you constantly have to assume that everything is going to go wrong at any moment. And just like driving, there are three kinds of threats:

- your own errors

- errors made by others

- environmental threats

This section will cover some strategies for minimising or eliminating these risks to ensure that your project has the best chance of success.

9.4.1 Assertions

Assertions are an easy way of checking that everything is going okay. Essentially an assertion is a way of declaring your expectations at various points throughout your program, and throwing an error if those expectations are not true.

In Python, assertions look like this:
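As a minimal sketch of the general form (the variable and its value are made up for illustration), an assert statement takes a condition that you expect to be true and, optionally, a message to show if it is not:

```python
n_columns = 10  # illustrative value, not read from a real file

# Passes silently because the condition is True.
assert n_columns == 10

# An optional message after the comma is shown if the assertion fails.
assert n_columns > 0, 'expected at least one column'

# A failing assertion raises AssertionError. It is caught here only so
# that this sketch runs to completion.
try:
    assert n_columns == 9, 'expected 9 columns'
except AssertionError as err:
    failure_message = str(err)
    print('Assertion failed:', failure_message)
```

In a real script you would normally not catch the AssertionError, so that the script stops as soon as an expectation is broken.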

If I was writing a script and I thought there was a chance that my input data was going to change, then I could write the data import like this:

    import pandas as pd

    df = pd.read_csv('~/data/twitter/datacamp_tweets_2018.csv')
    assert df.shape[1] == 10
    print('The script continues...')

If my assertion evaluates to True, then the script will continue running - great! We can check this by running the script and checking that the print statement is executed:

    $ python demo_assert.py
    The script continues...

If something changes in future, say if the file gets corrupted and only has 9 columns, then the assertion will evaluate to False and throw an error - in Python this will be an AssertionError. If I manually change the file by removing one of the columns then run the script, this is what I see:

    $ python demo_assert.py
    Traceback (most recent call last):
      File "demo_assert.py", line 4, in <module>
        assert df.shape[1] == 10
    AssertionError

This tells me straight away that one of my expectations is no longer correct, and gives me a bunch of hints about where to look for the error. Python makes it easy to add your own hints as well - for example I could provide a message alongside my assertion:

    assert df.shape[1] == 10, 'datacamp_tweets_2018.csv should have 10 columns'

If this assertion fails it will print something a little more helpful:

    $ python demo_assert.py
    Traceback (most recent call last):
      File "demo_assert.py", line 4, in <module>
        assert df.shape[1] == 10, 'datacamp_tweets_2018.csv should have 10 columns'
    AssertionError: datacamp_tweets_2018.csv should have 10 columns

Assertions let you declare your expectations throughout your project, and Python will tell you if those expectations are not true, as well as passing on as many hints as it can. Assertions can help you catch bugs as you write code, they can help you prevent incorrect use of your functions (by using assertions to check function inputs), and they can tell you when your assumptions about your environment aren’t true. How helpful!
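To illustrate the point about checking function inputs, here is a minimal sketch. The function train_size and its arguments are hypothetical and chosen only for this example:

```python
def train_size(n_rows, train_fraction):
    """Return how many rows belong in the training set."""
    # Declare expectations about the inputs before doing any work.
    assert isinstance(n_rows, int), 'n_rows should be an integer'
    assert n_rows > 0, 'n_rows should be positive'
    assert 0 < train_fraction < 1, 'train_fraction should be between 0 and 1'
    return int(n_rows * train_fraction)


print(train_size(100, 0.5))  # prints: 50

# Passing a percentage instead of a fraction is caught straight away.
try:
    train_size(100, 80)
except AssertionError as err:
    caught = str(err)
    print('Caught:', caught)
```

Without the assertions, the second call would quietly return 8000 training rows from a 100-row dataset, and the mistake would only surface much later.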

9.4.1.1 Assertions in R

Assertions do basically the same thing in every language, although in R they are written using the stopifnot() function. Using the above example, we could write the assertion as stopifnot(ncol(df) == 10), which will do exactly the same thing as the Python example - it will let the script continue executing if the assertion is correct, and will throw an error (stopping the script) if the assertion evaluates to false.

If you want the ability to write custom error messages with your assertions in R then you can use the assert_that() function from the assertthat package.

9.4.2 Readability

Writing readable code doesn’t immediately seem like a defensive strategy, but it’s actually one of the most effective strategies for reducing risks in your own code.

9.4.2.1 Formatting and white space

At the simplest level, you can improve readability through consistent formatting. Let’s look at an example from R:

    new_df = mutate(input_data, new_col = col_a + col_b)

    secondDataFrame <-
      new_df %>%
      select(new_col, id)

Both of these commands are valid R code, however they’re using wildly different formatting. For example:

- Mixed use of <- / =

- Mixed use of the magrittr pipe (%>%)

- Mixed use of newlines/indentation

- Mixed use of snake case (snake_case) and camel case (camelCase)

Being consistent within your own project is the first step towards readability; consistent code makes it easier to spot bugs or logical errors. The same goes for the use of white space - simply adding a few spaces to line up function arguments makes it a lot easier to see when something doesn’t fit.

See the following Python code from the Object Oriented Programming example:

    training_example_1 = TrainingExample(feature1=0.5234,feature2=True,label=0)
    training_example_2 = TrainingExample(feature1=0.7,featrue2=True,label=1)
    training_example_3 = TrainingExample(feature1=-1.5,feature2=False,label=1)

I’ve added a small typo there, but it doesn’t jump out straight away. Look at how much easier it is to spot when the function arguments are aligned:

    training_example_1 = TrainingExample(feature1=0.5234, feature2=True,  label=0)
    training_example_2 = TrainingExample(feature1=0.7,    featrue2=True,  label=1)
    training_example_3 = TrainingExample(feature1=-1.5,   feature2=False, label=1)

By lining up the arguments it is far easier to see that I made a typo on the second line - using featrue2 instead of feature2. In cases like this, adding spaces is a really easy way to improve readability, and it barely costs you any time at all.

9.4.2.2 Minimise repetition by writing functions

In R for Data Science, the authors argue that you should consider writing a function whenever you’ve copied and pasted a block of code more than twice (i.e. you now have three copies of the same code). The example from the book demonstrates this brilliantly, so we’ll take a look at the example here.

Consider the following example:

    df <- tibble::tibble(
      a = rnorm(10),
      b = rnorm(10),
      c = rnorm(10),
      d = rnorm(10)
    )

    df$a <- (df$a - min(df$a, na.rm = TRUE)) /
      (max(df$a, na.rm = TRUE) - min(df$a, na.rm = TRUE))
    df$b <- (df$b - min(df$b, na.rm = TRUE)) /
      (max(df$b, na.rm = TRUE) - min(df$a, na.rm = TRUE))
    df$c <- (df$c - min(df$c, na.rm = TRUE)) /
      (max(df$c, na.rm = TRUE) - min(df$c, na.rm = TRUE))
    df$d <- (df$d - min(df$d, na.rm = TRUE)) /
      (max(df$d, na.rm = TRUE) - min(df$d, na.rm = TRUE))

Not only is it very hard to see what the code is doing here, but there is also a bug due to a typo - copying and pasting is dangerous! The author made an error when copying and pasting the code for df$b: they forgot to change an a to a b. Extracting repeated code out into a function is a good idea because it prevents you from making this type of mistake.

Consider this alternative code, which does the same thing:

    rescale01 <- function(x) {
      rng <- range(x, na.rm = TRUE)
      (x - rng[1]) / (rng[2] - rng[1])
    }

    df$a <- rescale01(df$a)
    df$b <- rescale01(df$b)
    df$c <- rescale01(df$c)
    df$d <- rescale01(df$d)

Now you can just look at the rescale01 function once to see what it does (it rescales the column to values between 0 and 1), and quickly identify that it is being applied to each of the four columns in exactly the same way - no more bugs due to typos!

The functions chapter from R for Data Science contains more great examples of how you can improve readability by using functions.
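The same idea carries over to Python. The following is a rough translation of rescale01 and is not code from R for Data Science; it works on plain lists with made-up data and, unlike the R version, does not handle missing values or columns where every value is identical:

```python
def rescale01(values):
    """Rescale a list of numbers so that they lie between 0 and 1."""
    lowest = min(values)
    highest = max(values)
    return [(v - lowest) / (highest - lowest) for v in values]


# Illustrative data standing in for the columns of df.
columns = {
    'a': [2.0, 4.0, 6.0],
    'b': [10.0, 15.0, 20.0],
}

# The function is applied to every column in exactly the same way,
# so there is nothing to mistype when another column is added.
rescaled = {name: rescale01(values) for name, values in columns.items()}
print(rescaled['a'])  # prints: [0.0, 0.5, 1.0]
```

Looping over the columns removes even the repeated function calls, which leaves one fewer place for a copy-and-paste error to hide.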

9.4.2.3 Style Guides

If your organisation has a formal style guide, or even a set of informal conventions which are used by your team, then you should adhere to those guides or conventions. If it doesn’t, then R users should consider adopting the style guide presented in Hadley Wickham’s Advanced R book, and Python users should adopt PEP 8.

As a new R user, you should try to get in the habit of using the tidyverse packages whenever possible (dplyr, ggplot2, readr, tidyr, purrr, etc) as these packages help to improve readability, and they’re also a lot easier to learn. You may see other R users who use a lot of base R or alternative packages like data.table, however these are largely hangovers from before the tidyverse was available.

As a new Python user, you should focus on using pandas and numpy wherever possible, and generally conform to the conventions used by authors of other packages. For example, when using PyTorch you should look for example code made available by the PyTorch team and adopt their style unless there is a good reason not to.

9.4.2.4 Packaging

As your project grows and the number of functions increases, it is often worth bundling your code as a module (Python) or package (R), regardless of whether or not you ever intend to re-use any of the code in other projects. The benefits of using these frameworks include:

- Improved reproducibility

- Easy to write documentation for your functions

- Easy to implement unit testing

- Recording dependencies, including specific versions of packages if needed

- Improved collaboration in larger teams
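As one way of recording dependencies along with their versions, the sketch below uses the importlib.metadata module from Python's standard library (available from Python 3.8) to look up what is installed. The helper name installed_versions and the package names passed to it are illustrative:

```python
from importlib import metadata


def installed_versions(package_names):
    """Map each package name to its installed version, if any."""
    versions = {}
    for name in package_names:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            # The package is not installed in this environment.
            versions[name] = 'not installed'
    return versions


# Report the versions of the packages this project relies on.
for name, version in installed_versions(['pandas', 'numpy']).items():
    print(f'{name}: {version}')
```

Saving this output alongside your results gives a reader what they need to rebuild the same environment.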

9.4.2.5 Assessing readability

It can be hard to quantify code readability, which in turn makes it hard to assess your own code and identify opportunities for improvement. One way to think of code readability is “minimising WTF moments” for anyone reading your code for the first time. Putting yourself into someone else’s shoes and thinking about which bits of your code are the most confusing will quickly help you to narrow in on the sections of your code which need improvement.

9.4.3 Code Reviews

Going back to the driving analogy, once you’re confident enough (and licensed) to drive without supervision it’s really easy to forget about asking for feedback from people with more skills and experience. The same thing goes for coding - it can be hard to ask for a review, and it can be even harder to take feedback. But just because it’s hard doesn’t mean you shouldn’t do it - if you want to improve your skills then it is critical that you ask software engineers and domain experts to review your code and processes. More senior data scientists might also be able to help here, but it’s normally more valuable to get feedback from software engineers (who know more about programming than you) and domain experts (who understand less about programming but more about the business logic). Software engineers will help to extend your skills and can share the benefits of experience; domain experts can help you identify opportunities for improving readability, improving your communications strategy, and fine tuning high level program logic.

You should ask for feedback continuously, and accept feedback gratefully!

A tip for receiving advice that requires major changes to your code: remember that code is free and source control is a permanent record of your work. Don’t be afraid to delete functions or throw away bad code - using git means you can confidently delete code, with the ability to easily get it back later if you really need it.

9.5 Continuous Improvement

Above all, the best way to improve your programming is to practice. Using a programming language every day is a guaranteed way to make you better at programming. But there are some other things you can do to help accelerate your learning:

- Read other people’s code. Look at code written by senior colleagues, look at how your favourite packages are written, look at how the latest hyped-up deep learning model is implemented. The more code you read, the more different styles you will be exposed to, and the more examples you will have to draw on when writing your own code. You’ll probably need to spend most of the time googling to understand what you’re seeing at first, but stick with it!

- Read blogs by leading data scientists to understand what they’re doing, what they’re learning, how they’re approaching new problems, and what tools and packages they are using.

- Read research code (from Google, Microsoft, etc) to understand how professional engineers use code to implement cutting edge machine learning techniques

- Read bad code! As you improve you will get better at identifying “bad” practices, and by practicing on other people’s code you will get better at catching bad practices in your own code.

- Read your old code and see if you can understand it (and then improve it!).

- See if you can replicate your work on another computer. Reproducibility is critical for data science, so you should test your code to make sure it is reproducible.

- Write assertions and unit tests.

- Challenge yourself to replace other tools in your daily workflow (e.g. Excel, Tableau) with R or Python.
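To make the suggestion about unit tests concrete, here is a minimal sketch using Python's built-in unittest module. The function under test, rescale01, is a hypothetical Python helper that rescales a list of numbers to the range 0 to 1:

```python
import unittest


def rescale01(values):
    """Rescale a list of numbers so that they lie between 0 and 1."""
    lowest, highest = min(values), max(values)
    return [(v - lowest) / (highest - lowest) for v in values]


class TestRescale01(unittest.TestCase):
    def test_range_is_zero_to_one(self):
        result = rescale01([5, 10, 15])
        self.assertEqual(min(result), 0.0)
        self.assertEqual(max(result), 1.0)

    def test_order_is_preserved(self):
        self.assertEqual(rescale01([1, 3, 2]), [0.0, 1.0, 0.5])


# Run the tests programmatically so the sketch works when pasted into a
# script or notebook.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(TestRescale01)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

In a real project the tests would normally live in their own file and be run from the command line, for example with python -m unittest.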

9.6 Technical Debt

Technical debt is a concept that all data scientists should understand. The Wikipedia summary is a good one so we’ll take a look at it here:

Technical debt (also known as design debt or code debt) is a concept in software development that reflects the implied cost of additional rework caused by choosing an easy solution now instead of using a better approach that would take longer.

Technical debt can be compared to monetary debt. If technical debt is not repaid, it can accumulate ‘interest’, making it harder to implement changes later on.

Technical debt is not necessarily a bad thing, and sometimes (e.g., as a proof-of-concept) technical debt is required to move projects forward.

Technical debt is always a trade-off, because the very act of writing code will increase your technical debt! Think about the tools you use on your computer, and try to guess how long ago the code for those tools was written. How many of your tools were created in the last year? Five years? Ten years? Most code has an expiry date, which means that every line of code you write in a production system is increasing technical debt. This reinforces the point that technical debt is not necessarily a bad thing, but it is something you should be aware of when making decisions about design and project prioritisation.

You will normally encounter technical debt when thinking “do I have to write this code properly, or can I leave it messy as long as it gets the job done?”. There isn’t necessarily a problem with writing messy code, but it’s worth keeping the concept of technical debt in mind as a way to think about the down-side risk associated with quick and dirty solutions. The same thing goes for decisions around letting data scientists put code into production, rather than engaging professional programmers. Data scientists normally can write code for production systems, but doing so will create more technical debt than if the code was written by a professional software engineer.

9.7 Further Reading

Although already linked above, the presentation by Joel Grus about why he hates notebooks is definitely worth reading. By understanding his very valid complaints about notebooks, you can think about ways to mitigate these issues in your own work.

Joel also presented more recently about how his learnings from software engineering have helped him become a better data scientist.

I linked a blog by Vicky Boykis at the start of this module, but it’s really important so I will link it again here. This blog lays out the case for why it is so important that you learn at least one programming language really well.